Stelios Strongylis

An empirical guide to feature prioritization

Intro

A clear, prioritized roadmap can sharpen any team’s focus and give it confidence about the period ahead.

To be honest, there is no magic formula for prioritizing efficiently. You need constant iteration and a combination of methods that suits your business model and strategy. In reality, prioritization is a cycle of constant assessment and reassessment.

There are many prioritization methods, some very simple and others more complex. If you don’t use a roadmap tool that automates prioritization, here is a list of popular methods that may suit you:

- Ranking

- MoSCoW Technique

- R.I.C.E. framework

- Analytic Hierarchy Process (AHP)

- 5 Whys

- Kano Model

- Opportunity Scoring

- Net Present Value (NPV)

- Weighted Scoring framework

- Eisenhower matrix

- Value vs Complexity matrix

There are many more, enough to make this list huge!

For me, combining the MoSCoW method (for grouping) with the Weighted Scoring framework (for prioritization) works well for finding the business value of incoming requests and sanity-checking them. Let’s see how!

Feature Gathering

To move into the first stage of feature gathering, you must be 101% aligned with the vision of your organization and be sure it is known and followed across all levels and teams.

After that, you can start gathering product needs and requests from each team involved. Some requests are big and carry significant business value, while others are small but keep the motor running.

It’s a good practice to group small tasks under one bigger feature umbrella (if possible) to see the bigger picture and have a fair comparison between the backlog and product roadmap.

The prioritization method that follows works on the big features that make up the product roadmap and shape the company’s evolution.

Grouping

A nice way to start cleaning up and grouping requests is the MoSCoW method. It was developed by Dai Clegg of Oracle UK in 1994 and first used extensively with the agile project delivery framework Dynamic Systems Development Method (DSDM) from 2002.

MoSCoW stands for:

- Must — Must have this requirement to meet the business needs

- Should — Should have this requirement if possible, but project success does not rely on it

- Could — Could have this requirement if it does not affect anything else on the project

- Won’t — Would like to have this requirement later, but delivery won’t be this time

The o’s in MoSCoW are added to make the acronym pronounceable and are often in lowercase to show they don’t stand for anything.

Stuffing too many features into your product may jeopardize project budgets, timelines, and business goals. MoSCoW helps you decide which requirements are most significant and belong at the top of the “complete-first” list, which can come later, and which to exclude.

Unlike a numbering system for setting priorities, the words mean something and make it easier to discuss what’s important. The “Must” requirements should provide a coherent solution and, on their own, be enough to lead to project success.

If you often find yourself with “Should” or “Could” requests, try to break them down into smaller features that fall under more than one group. You and your dev team will then receive simpler requests, which saves time and produces higher-quality results.

A good example is the development of an automotive turn signal indicator.

Imagine a new automotive company that is designing a prototype and needs to add turn signal indicators to meet international safety standards. It can break the whole feature down into the following tasks:

- A must-have task: a simple, cheap lightbulb that flashes every time the driver needs to notify the other drivers around.

- A should-have task, building on the previous one: a more efficient light that can be seen from further away and in all weather conditions. This may mean adding slightly more expensive LED lamps to the turn signals.

- And last, a could-have task: a more visual representation of the action that will follow when a driver indicates her intention to switch lanes.
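The breakdown above can be sketched in a few lines of Python. This is only an illustration: the `MoSCoW` enum, the task labels, and the rule of dropping “Won’t” items from the current delivery are assumptions of this sketch, not part of the MoSCoW method’s definition.

```python
from enum import IntEnum


class MoSCoW(IntEnum):
    # Lower value means earlier in the delivery order (sketch assumption).
    MUST = 1
    SHOULD = 2
    COULD = 3
    WONT = 4


# Tasks from the turn signal example; the labels are this sketch's wording.
tasks = [
    ("More visual indication of the intended lane change", MoSCoW.COULD),
    ("Simple, cheap flashing lightbulb", MoSCoW.MUST),
    ("Brighter LED lamps visible in all weather", MoSCoW.SHOULD),
]

# Leave "Won't" items out of this delivery, then order the rest by group.
# sorted() is stable, so tasks inside one group keep their original order.
delivery_order = sorted(
    (task for task in tasks if task[1] is not MoSCoW.WONT),
    key=lambda task: task[1],
)

for description, group in delivery_order:
    print(f"{group.name:<6} {description}")
```

Keeping the group as data next to each task makes it easy to regroup when a “Should” is split into smaller pieces that land in different groups.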

Ranking

For ranking, my framework of choice is Weighted Scoring, which uses numerical scoring to rank your strategic initiatives against benefit and cost categories. It is useful and flexible for any type of business looking for an objective prioritization technique.

The “weighted” aspect of the scoring process comes from deciding that certain criteria are more important than others and, therefore, giving those criteria a larger share of the overall score. For example, when evaluating a piece of equipment, you might assign more weight to its complexity or time to implement than to its purchase cost.

The good thing about this method is that you can change the weights at any time, based on business and/or market needs.

For the process that follows, it is mandatory to involve your manager, your main stakeholder, or the C-level executive you report to.

In a product context, weighted scoring prioritization works as follows.

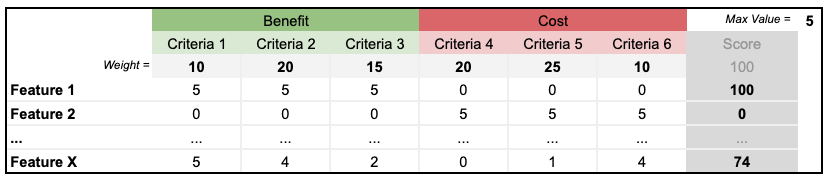

- Devise a list of criteria, including costs and benefits, on which you’ll be scoring each initiative.

- Determine the respective weight of each criterion you’ll be using to evaluate your competing initiatives. For example, say you determine that the benefit “Increase Revenue” should be weighted more heavily in the overall score than the cost “Implementation Effort.” You will then want to assign a greater percentage of the overall score to Increase Revenue.

- Assign individual scores for each potential feature or initiative, across all cost-and-benefit metrics, and then calculate these overall scores to determine how to rank your list of items.

The screenshot shows an example that uses six scoring criteria (three costs, three benefits) to rank the relative strategic value of competing product initiatives.

The score given to each criterion determines what percentage of that criterion’s weight counts toward the total. For benefits, a score of 5 earns 100% of the criterion’s weight; for costs, a score of 0 earns 100% of the criterion’s weight.

Benefit criteria examples

- Increase Revenue (e.g. new client acquisition, profit channels, CR), weight: 10

- Strategic value (e.g. fully automated process, AI support), weight: 20

- Customer experience (e.g. improved UX, increased engagement, fewer complaints), weight: 15

Cost criteria examples

- Effort (e.g. development hours, complexity), weight: 20

- Risk (e.g. ease of rollback, pivots, brand threat), weight: 25

- Operational cost (e.g. increased CS work, extra personnel needed), weight: 10
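To make the arithmetic concrete, here is a minimal Python sketch of the calculation, using the example weights listed above (which sum to 100). It assumes every criterion is scored on a 0 to 5 scale and that the share of the weight scales linearly between the two ends. The function name `weighted_score`, the two features, and their scores are hypothetical and made up for illustration.

```python
MAX_SCORE = 5  # assumed scale: every criterion is scored from 0 to 5

# Weights taken from the benefit and cost examples above; they sum to 100.
BENEFIT_WEIGHTS = {"increase_revenue": 10, "strategic_value": 20, "customer_experience": 15}
COST_WEIGHTS = {"effort": 20, "risk": 25, "operational_cost": 10}


def weighted_score(benefits: dict[str, int], costs: dict[str, int]) -> float:
    """Return a 0-100 score; a higher score means a higher rank."""
    total = 0.0
    for name, weight in BENEFIT_WEIGHTS.items():
        # A benefit scored 5 earns the full weight of its criterion.
        total += weight * benefits[name] / MAX_SCORE
    for name, weight in COST_WEIGHTS.items():
        # A cost scored 0 earns the full weight, so the scale is inverted.
        total += weight * (MAX_SCORE - costs[name]) / MAX_SCORE
    return total


# Hypothetical features with made-up scores, for illustration only.
features = {
    "Self-service onboarding": (
        {"increase_revenue": 4, "strategic_value": 5, "customer_experience": 4},
        {"effort": 3, "risk": 2, "operational_cost": 1},
    ),
    "Dark mode": (
        {"increase_revenue": 1, "strategic_value": 1, "customer_experience": 3},
        {"effort": 1, "risk": 0, "operational_cost": 0},
    ),
}

# Rank features from the highest overall score to the lowest.
ranking = sorted(
    ((name, weighted_score(b, c)) for name, (b, c) in features.items()),
    key=lambda item: item[1],
    reverse=True,
)
for name, score in ranking:
    print(f"{name}: {score:.1f}")
```

With these made-up scores, the first feature totals 71 and the second 66. Because the weights live in two plain dictionaries, re-ranking after a change in business or market needs only means editing the weights and running the calculation again.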

Extra thoughts

If you work in an organization with several products or locales, you can merge the product needs and rank the features by their global versus local value. This value can be defined by your main business priorities (e.g. growth, global benefits, sub-product strategic value).

I don’t wanna spoil the party here, but I must mention that it is common for priorities to be handed down by HiPPOs or the C-level team. Involving them from the beginning in defining the benefits, costs, and weights will save you some time and give you room to make minor corrections to your formula.

Once you feel confident with the above ranking structure, or any other you choose, you can proceed with the OKR framework to align all teams on the same product path.

© 2021-2023 by Product Community Greece